Project Description

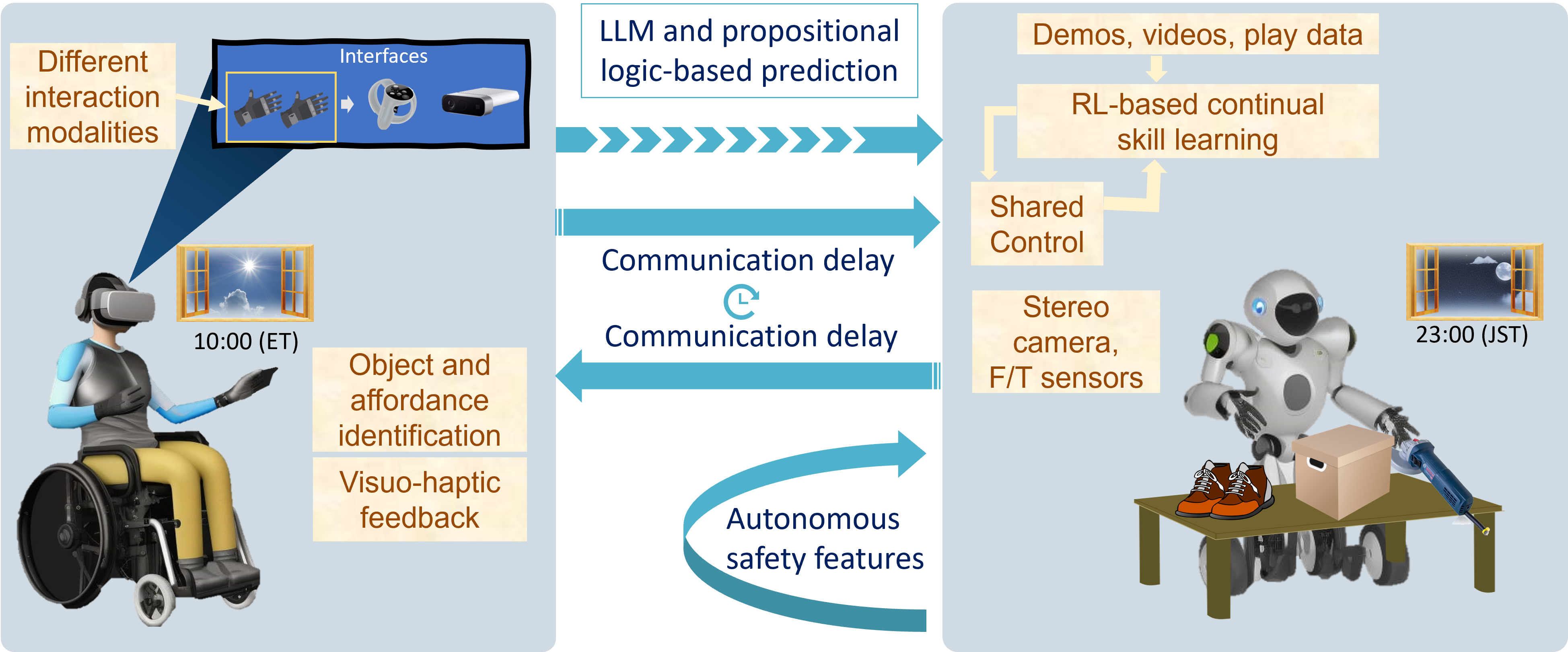

Robots can alleviate the burden of repetitive and dangerous tasks. Such robots need to be autonomous at a low level while remaining able to follow high-level human guidance. The interface must allow burdensome tasks to be performed semi-automatically (through shared control), leaving the high-level planning to the user through teleoperation. Collaboration between robot learning and human guidance is necessary for solving unstructured problems. We propose a general shared autonomy framework (not targeted at a specific robotic system) that relies on Large Language Models (LLMs) and propositional logic for action inference and user-intention prediction, together with an AI framework for continuously learning how to perform tasks.

PI

Rafael Cisneros-Limón

Period

2023.11-2026.3

Related Publications (including JRL contributions)

| Title | Authors | Conference/Book | Year | bib |