The Humanoid Lab is part of the CNRS-AIST JRL (Joint Robotics Laboratory), located at AIST Tsukuba, about 5 km from the main campus of the University of Tsukuba. It is associated with the university through the Cooperative Graduate School System, which means that graduate students at the university can work at JRL as trainees and research assistants (RAs) under the supervision of Prof. Kanehiro (Faculty of Cooperative Graduate School, IMIS).

The lab offers graduate students a unique opportunity to work with Japanese and international research scientists on a wide variety of robot platforms and research topics. Our main research subjects include task and motion planning and control, multimodal interaction with humans and the surrounding environment through perception, and cognitive robotics.

Most members of our lab are bilingual (some are quadrilingual!), so we welcome both Japanese-speaking and English-speaking students.

The lab is always looking for talented and motivated graduate students. Applicants must be accepted to the Master's or Doctoral Program in Intelligent and Mechanical Interaction Systems (IMIS), University of Tsukuba, through the regular admission procedure (examinations are held in summer and winter).

If you're interested, please contact the lab or Prof. Kanehiro directly before you start the application procedure.

(This page is maintained by current students.)

Research content

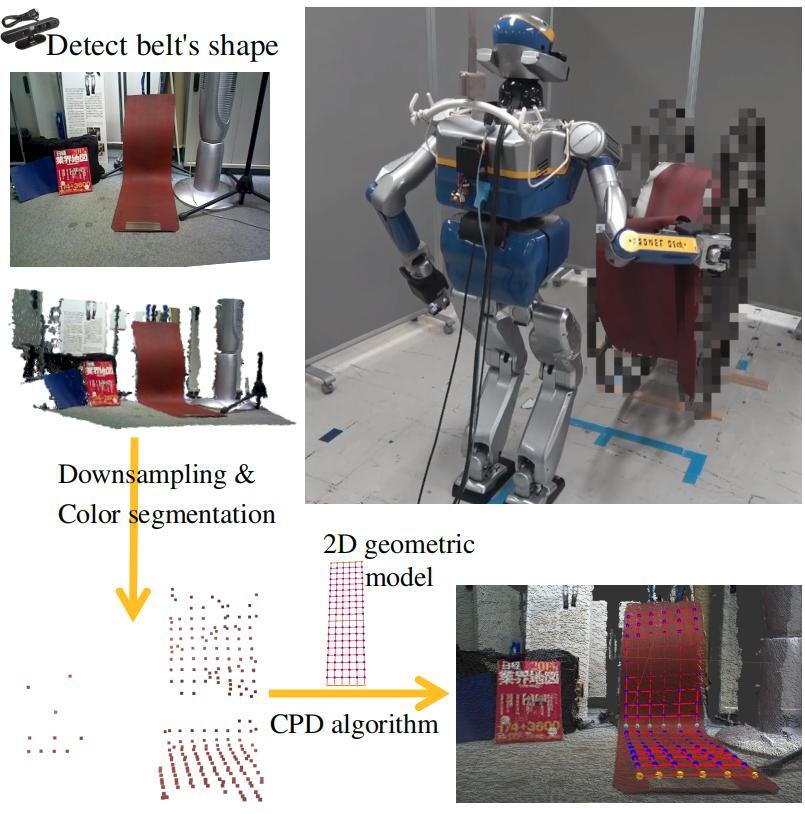

Vision-based Belt Manipulation by Humanoid Robot

Deformable objects are very common in our daily life. Because they have infinitely many degrees of freedom, they present a challenging problem in robotics. Inspired by practical industrial applications, we use a humanoid robot to take a long, thin, flexible belt out of a bobbin and pick up the bent part of the belt from the ground. We propose a novel non-prehensile manipulation strategy, “scraping,” which exploits the friction between the gripper and the belt surface to manipulate the belt efficiently. In addition, a 3D shape detection algorithm for deformable objects is used during the manipulation process. By integrating the scraping motion and the shape detection algorithm into our multi-objective QP-based controller, we show experimentally that a humanoid robot can complete this complex task.

sim2real: Learning Humanoid Locomotion Using RL

Recent advances in deep reinforcement learning (RL), combined with training in simulation, have offered a new approach to developing control policies for legged robots. However, the application of such approaches to real hardware has largely been limited to quadrupedal robots with direct-drive actuators and lightweight bipedal robots with low gear-ratio transmissions. Application to life-sized humanoid robots has remained elusive because of the large sim2real gap arising from their large size, heavier limbs, and high gear-ratio transmissions.

In this work, we investigate methods for effectively closing the sim2real gap for large humanoid robots, with the goal of deploying RL policies trained in simulation on real hardware.

Link to YouTube video: here.

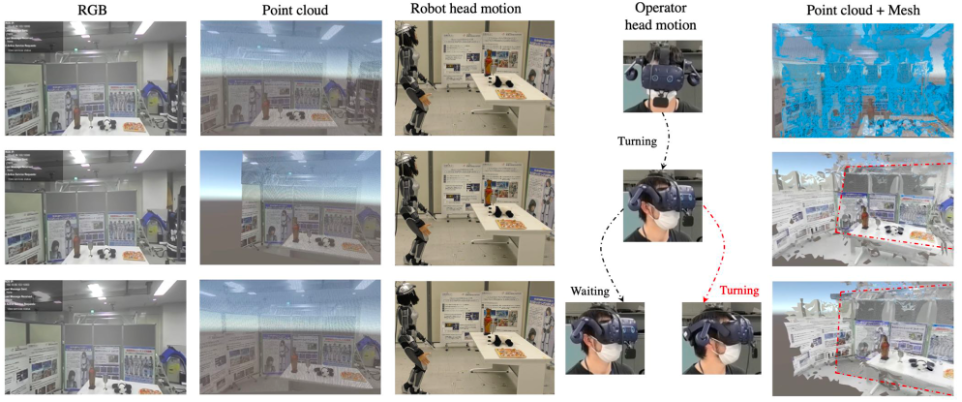

Enhanced Visual Feedback with Decoupled Viewpoint Control in Immersive Teleoperation using SLAM

During humanoid robot teleoperation, there is a noticeable delay between the motion of the operator’s head and that of the robot’s head. This latency makes the visual feedback lag, which reduces immersion, may cause dizziness, and lowers the efficiency of teleoperation because the operator has to wait for the visual feedback to catch up. To solve this problem, we developed a decoupled viewpoint control solution that lets the operator see viewpoint changes in VR with low latency and extends the visible range. In addition, we propose a complementary SLAM solution that uses the reconstructed mesh to fill in the blank areas not covered by the robot’s real-time point cloud. The operator can perceive the real-time orientation of the robot’s head by observing the pose of the point cloud.
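
One way to read “decoupled viewpoint control” is that the VR camera follows the operator’s head immediately, while the robot’s point cloud stays anchored in the world at the pose where it was captured. The sketch below shows only that frame bookkeeping with plain 4x4 homogeneous matrices; the function and frame names are hypothetical, and it is not the lab’s rendering pipeline.

```python
import numpy as np

def cloud_in_operator_view(points_cam, T_world_robot_cam, T_world_operator_eye):
    """Re-express a robot point cloud in the operator's current eye frame.

    points_cam           : (N, 3) points in the robot camera frame at capture time
    T_world_robot_cam    : 4x4 pose of the robot camera when the cloud was captured
    T_world_operator_eye : 4x4 pose of the operator's VR headset right now
    (All names are illustrative assumptions, not the system's actual API.)
    """
    pts = np.asarray(points_cam, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    # Anchor the cloud in the world using the robot camera pose at capture time...
    world = homog @ T_world_robot_cam.T
    # ...then view it from the operator's current head pose, without waiting for the robot.
    eye = world @ np.linalg.inv(T_world_operator_eye).T
    return eye[:, :3]
```

Because the operator pose enters only in the last step, turning the head changes the rendered view at headset rate, while a lagging robot head shows up as the cloud’s pose shifting in the world.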

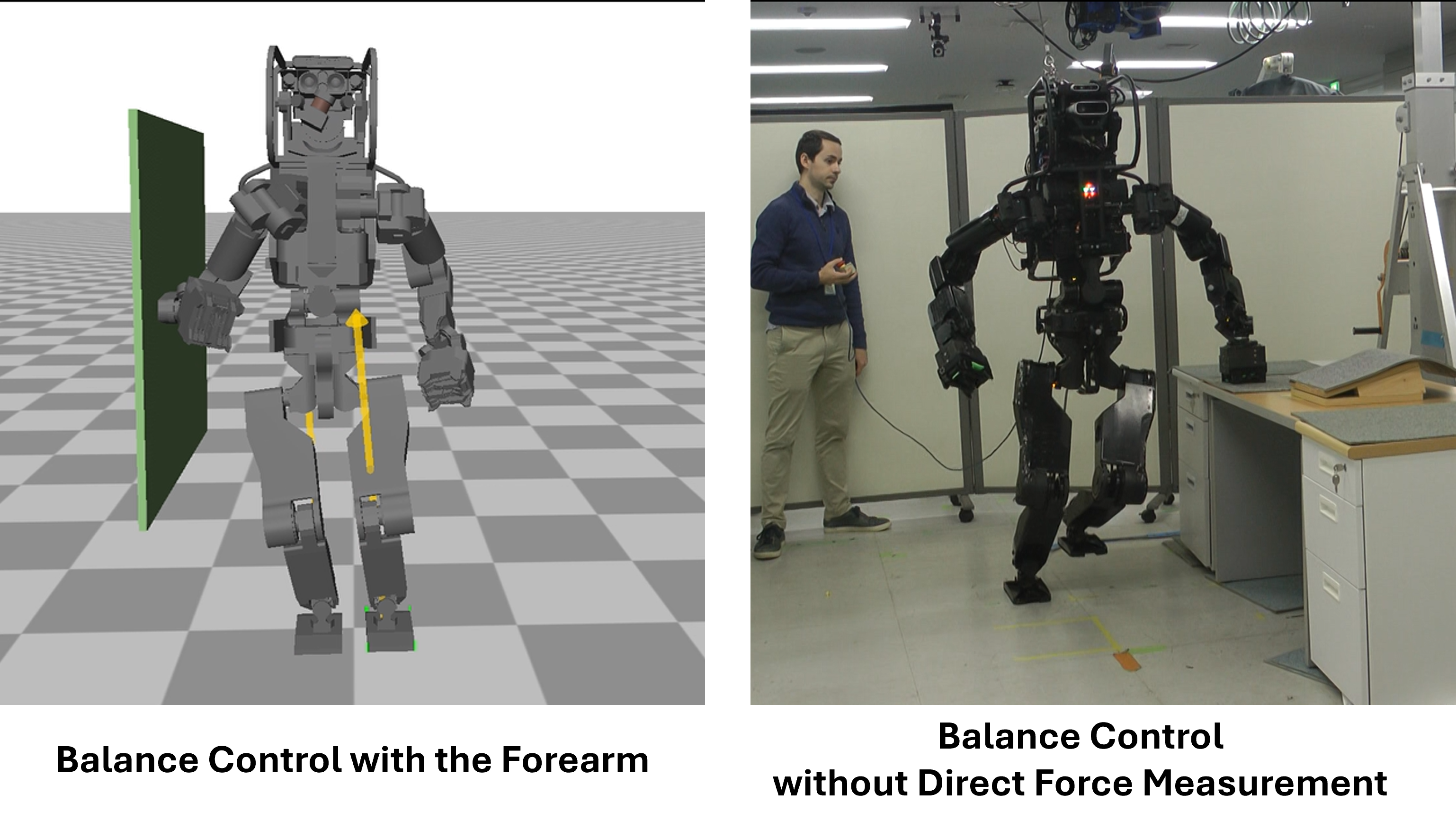

Multi-Contact Motions Using the Elbow or Knee of a Humanoid Robot via External Force Estimation

Humanoid robots are expected to work like humans, but several limitations still stand in the way. We focus on one of them: using intermediate body parts such as elbows or knees as control points. Humans use these parts routinely, but humanoid robots usually cannot. One reason is that humanoid robots lack force sensors there and typically have force sensors only on the end-effectors, whereas force control requires measuring the force being controlled. Distributing sensors over the whole body would solve this, but it is unrealistic because of the mechanical and electrical complexity. Instead, we adopt external force estimation based on the “Kinetics Observer.” The Kinetics Observer uses IMUs, joint encoders, and end-effector force sensors as measurements, all of which are standard equipment on many humanoid robots. Using the observer’s external force estimate, force control becomes possible without direct force measurement. The picture shows simulation and experimental scenes demonstrating multi-contact motion without direct force measurement.
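
The core intuition behind estimating an unsensed contact force can be illustrated with a deliberately simplified sketch: treat the whole robot as a single rigid body of mass m, assume its center-of-mass acceleration is known (for example from the IMU and encoders), and subtract the forces measured at the end-effectors from Newton’s second law. Whatever force remains must be applied at the unsensed contact, such as an elbow. This is not the Kinetics Observer, which is a full state estimator that also handles moments, dynamics, and noise; the function and parameter names are hypothetical.

```python
import numpy as np

GRAVITY = np.array([0.0, 0.0, -9.81])  # m/s^2, world frame with z pointing up

def estimate_unmeasured_contact_force(mass, com_acc, measured_forces):
    """Force residual of Newton's second law for a single-rigid-body model.

    mass            : total robot mass [kg]
    com_acc         : center-of-mass acceleration in the world frame [m/s^2]
    measured_forces : list of 3D contact forces from end-effector sensors [N]

    Returns the force an additional, unsensed contact (e.g. an elbow) must apply
    so that the sum of all contact forces equals m * (com_acc - g).
    """
    total_required = mass * (np.asarray(com_acc, dtype=float) - GRAVITY)
    total_measured = np.sum(np.asarray(measured_forces, dtype=float), axis=0)
    return total_required - total_measured

# Example: a 100 kg robot standing still, each foot sensor reading 400 N upward.
f_elbow = estimate_unmeasured_contact_force(100.0, [0.0, 0.0, 0.0],
                                            [[0.0, 0.0, 400.0], [0.0, 0.0, 400.0]])
# About 181 N upward must then be carried by the unsensed contact.
```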

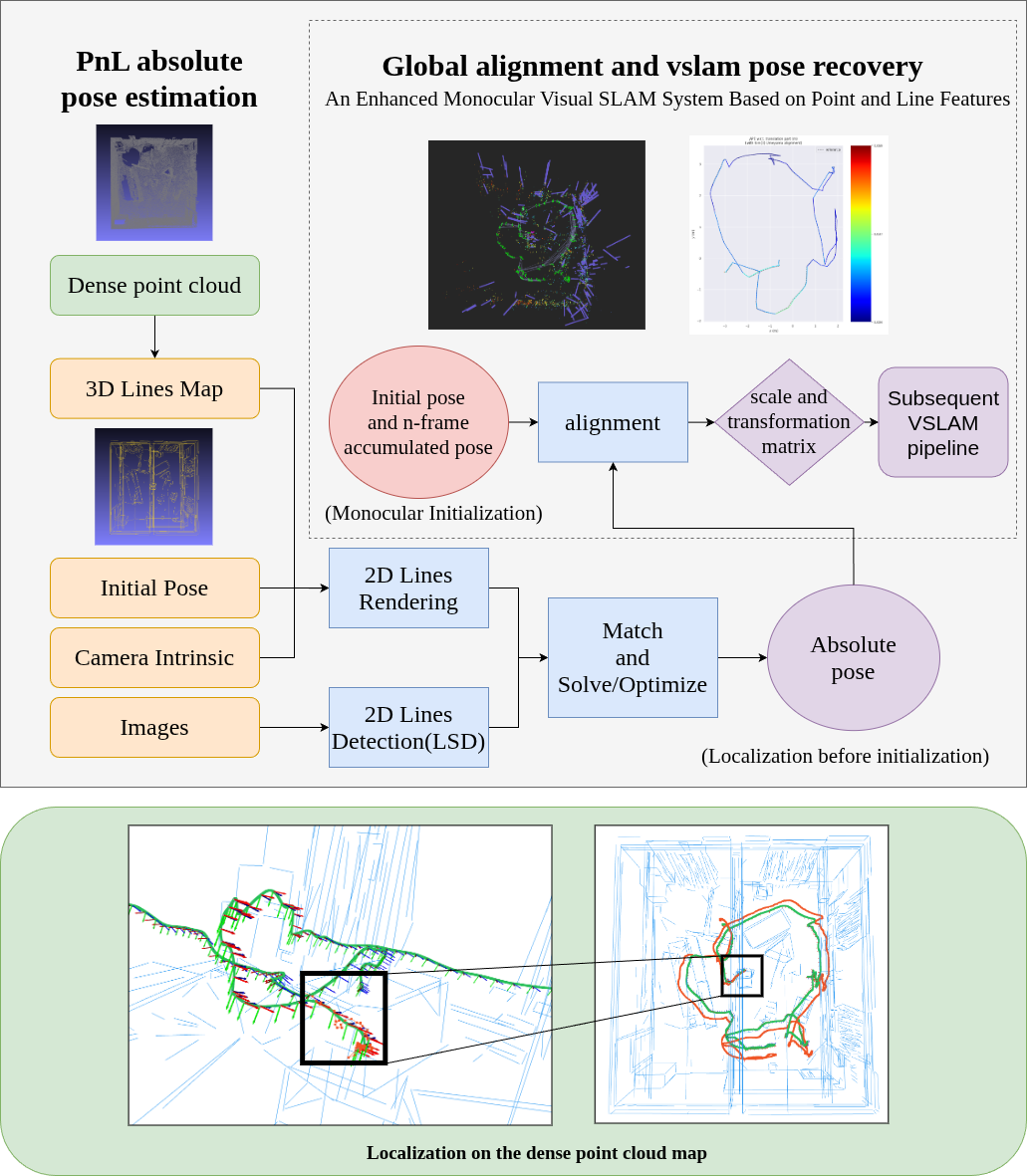

Monocular Visual SLAM that works with Pre-built Point Cloud Maps using Point and Line Features

Monocular Visual SLAM is attractive for its low cost and portability, but it suffers from scale ambiguity and typically cannot localize directly in heterogeneous prior maps such as dense LiDAR/3D-reconstruction point clouds. This project enables a monocular point-line SLAM pipeline to perform absolute localization in a pre-built dense point cloud map. We first extract 3D structural line segments from the dense point cloud offline, and then detect 2D line segments in incoming images online. By establishing robust 2D-3D line correspondences and solving a Perspective-n-Line (PnL) optimization, the system estimates metric absolute camera poses during initialization, recovering real-world scale without requiring additional sensors. After monocular initialization, multiple PnL poses are accumulated and aligned with the SLAM trajectory to compute a fixed Sim(3) transformation, which is then propagated throughout tracking and mapping to maintain globally consistent poses in the dense map coordinate frame.
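
The final step, computing one fixed Sim(3) transformation from several PnL poses, amounts to aligning two sets of corresponding camera positions up to scale, rotation, and translation. A common closed-form solver for this is Umeyama’s method. The sketch below applies it to positions only; that choice is an assumption, since the page does not state which solver the project uses, and the function name is hypothetical.

```python
import numpy as np

def umeyama_sim3(src, dst):
    """Least-squares similarity transform such that dst ≈ s * R @ src + t.

    src : (N, 3) camera positions from the monocular SLAM trajectory (arbitrary scale)
    dst : (N, 3) matching camera positions from PnL in the dense-map frame
    Returns (s, R, t).
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - mu_src, dst - mu_dst

    # Cross-covariance between the centred point sets, then its SVD.
    cov = dst_c.T @ src_c / len(src)
    U, D, Vt = np.linalg.svd(cov)

    # Guard against a reflection so that det(R) = +1.
    S = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        S[2, 2] = -1.0

    R = U @ S @ Vt
    var_src = (src_c ** 2).sum() / len(src)
    s = np.trace(np.diag(D) @ S) / var_src
    t = mu_dst - s * R @ mu_src
    return s, R, t
```

Once (s, R, t) is fixed, any later SLAM camera position p can be mapped into the dense-map frame as s * R @ p + t, and orientations by left-multiplying with R, which keeps the whole trajectory in one consistent frame.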

Past research

Bipedal Walking With Footstep Plans via Reinforcement Learning

To apply RL policy controllers to humanoid robots in real-world settings, it is crucial to build a system that can walk robustly in any direction, on 2D and 3D terrains, and that can be controlled by user commands. In this paper, we tackle this problem by learning a policy that follows a given step sequence. The policy is trained with a set of procedurally generated step sequences (also called footstep plans).

We show that simply feeding the upcoming two steps to the policy is sufficient to achieve omnidirectional walking, turning in place, standing, and climbing stairs. Our method employs curriculum learning on the objective function and on sample complexity, and it circumvents the need for reference motions or pre-trained weights. We demonstrate the method by learning RL policies for three notably distinct robot platforms, HRP5P, JVRC-1, and Cassie, in the MuJoCo simulator.
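
To make “feeding the upcoming two steps to the policy” concrete, here is a minimal sketch of how such an input could be assembled: each target footstep (x, y, z, yaw) in the world frame is re-expressed relative to the robot’s current base pose and flattened into the observation vector. The step format, frame convention, padding rule, and function names are assumptions for illustration, not the observation layout used in the paper.

```python
import math
from typing import List, Sequence, Tuple

Step = Tuple[float, float, float, float]  # (x, y, z, yaw) in the world frame

def step_in_base_frame(step: Step, base: Step) -> Step:
    """Express a world-frame footstep relative to the robot base pose."""
    dx, dy, dz = step[0] - base[0], step[1] - base[1], step[2] - base[2]
    c, s = math.cos(-base[3]), math.sin(-base[3])
    # Rotate the planar offset by -base_yaw so x points along the robot's heading.
    local_x = c * dx - s * dy
    local_y = s * dx + c * dy
    # Wrap the relative yaw to [-pi, pi).
    local_yaw = (step[3] - base[3] + math.pi) % (2 * math.pi) - math.pi
    return (local_x, local_y, dz, local_yaw)

def footstep_observation(plan: Sequence[Step], next_index: int, base: Step,
                         n_steps: int = 2) -> List[float]:
    """Flatten the next n_steps footsteps of the plan into a feature list.

    If the plan runs out (e.g. when the robot should stand), the last step is repeated.
    """
    obs: List[float] = []
    for k in range(n_steps):
        idx = min(next_index + k, len(plan) - 1)
        obs.extend(step_in_base_frame(plan[idx], base))
    return obs
```

Expressing steps in the base frame keeps the input invariant to where the robot is in the world, which is one reason local coordinates are a common choice for this kind of policy input.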

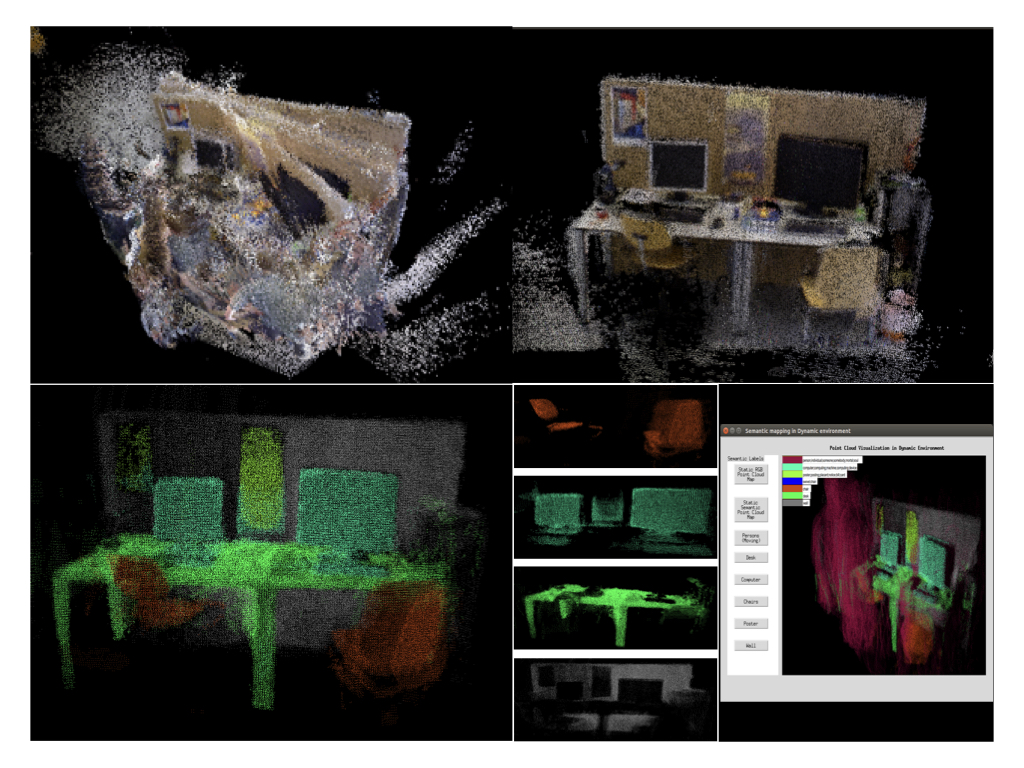

Simultaneous Localization and Mapping (SLAM) in Dynamic Environment

SLAM in dynamic environments, known as dynamic SLAM, has become a popular research topic. Many proposed solutions segment out dynamic objects, which otherwise introduce errors into camera tracking and 3D reconstruction.

However, state-of-the-art methods struggle to balance accuracy and speed. We propose a multi-purpose dynamic SLAM framework that lets users select among various segmentation strategies, each suited to different scenes. When semantic segmentation is used, the framework also supports object-oriented semantic mapping, which is highly beneficial for high-level robotic tasks.
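
Letting the user pick a segmentation strategy can be sketched as a small strategy table: each strategy produces a per-pixel mask of dynamic pixels, and the tracker drops keypoints that fall on masked pixels before estimating the camera pose. The two strategies below (a hypothetical set of semantic class ids treated as dynamic, and a simple frame-difference threshold that assumes the camera barely moved between frames) are illustrative placeholders, not the segmentation methods used in the framework.

```python
from typing import Callable, Dict, Set
import numpy as np

# A strategy maps (frame, prev_frame, labels) to a boolean mask of dynamic pixels.
MaskStrategy = Callable[[np.ndarray, np.ndarray, np.ndarray], np.ndarray]

DYNAMIC_CLASSES: Set[int] = {1}  # hypothetical label id, e.g. "person"

def semantic_mask(frame, prev_frame, labels):
    """Mark pixels whose semantic label belongs to a dynamic class."""
    return np.isin(labels, list(DYNAMIC_CLASSES))

def motion_mask(frame, prev_frame, labels, threshold=25):
    """Mark pixels whose intensity changed by more than `threshold` (assumes a nearly static camera)."""
    diff = np.abs(frame.astype(np.int16) - prev_frame.astype(np.int16))
    return diff > threshold

STRATEGIES: Dict[str, MaskStrategy] = {"semantic": semantic_mask, "motion": motion_mask}

def filter_static_keypoints(keypoints, frame, prev_frame, labels, strategy="semantic"):
    """Keep only (row, col) keypoints on pixels the chosen strategy considers static."""
    mask = STRATEGIES[strategy](frame, prev_frame, labels)
    return [(r, c) for r, c in keypoints if not mask[r, c]]
```

Keeping the strategies behind one interface means the tracking code does not change when a cheaper or more accurate segmentation method is selected for a given scene.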

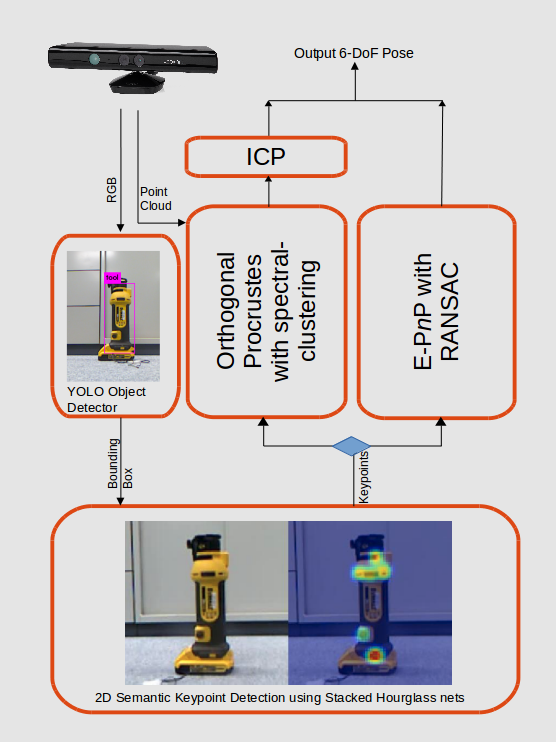

6-DoF Object Pose Estimation

For a humanoid robot to interact with objects, it must estimate the object’s 6-DoF pose—its 3D position and orientation (roll, pitch, yaw). This is usually done using the robot’s vision sensors.

Accurate estimation is critical for precise grasping and manipulation, especially under challenging lighting, under occlusion, or with incomplete object models. This work explores deep learning-based techniques for robust and accurate pose estimation in such conditions.
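
As a concrete reference for what a 6-DoF pose contains, the sketch below packs a position and roll/pitch/yaw angles into a 4x4 homogeneous transform and uses it to map a point from the object frame into the camera frame. The Z-Y-X (yaw, then pitch, then roll) rotation order is one common convention and is an assumption here; other conventions give different matrices for the same angles. Function names are hypothetical.

```python
import numpy as np

def pose_to_matrix(x, y, z, roll, pitch, yaw):
    """Build T such that p_camera = T @ [p_object, 1], with R = Rz(yaw) @ Ry(pitch) @ Rx(roll)."""
    cr, sr = np.cos(roll), np.sin(roll)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cy, sy = np.cos(yaw), np.sin(yaw)
    Rx = np.array([[1.0, 0.0, 0.0], [0.0, cr, -sr], [0.0, sr, cr]])
    Ry = np.array([[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]])
    Rz = np.array([[cy, -sy, 0.0], [sy, cy, 0.0], [0.0, 0.0, 1.0]])
    T = np.eye(4)
    T[:3, :3] = Rz @ Ry @ Rx   # 3 rotational DoF
    T[:3, 3] = [x, y, z]       # 3 translational DoF
    return T

def transform_point(T, p):
    """Map a 3D point from the object frame to the camera frame."""
    return (T @ np.append(p, 1.0))[:3]
```

A grasp point defined on the object model, for example, can be sent to the motion planner by passing it through `transform_point` with the estimated pose.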

Publications

| Title | Authors | Venue | Year |
|---|---|---|---|
| Estimation-based Control of Forces Applied to Parts of Humanoids That Do Not Have Force Sensors | Y. Aoyama, M. Benallegue, A. Demont, F. Kanehiro | IEEE International Workshop on Advanced Motion Control | 2026 |
| Improving Robotic Imitation Learning with Predicted Facial Motion Using Transformers | Y. Li, F. Kanehiro | IEEE/SICE International Symposium on System Integration | 2026 |
| 3D Object Reconstruction Through Integration of Hyperspectral and RGB-D Imaging | C. Hong, G. Caron, N. Imamoglu, F. Kanehiro | IAPR International Conference on Machine Vision Applications | 2025 |
| TACT: Humanoid Whole-body Contact Manipulation through Deep Imitation Learning with Tactile Modality | M. Murooka, T. Hoshi, K. Fukumitsu, S. Masuda, M. Hamze, T. Sasaki, M. Morisawa, E. Yoshida | IEEE Robotics and Automation Letters | 2025 |
| Aligning objects as preprocessing combined with imitation learning for improved generalization | M. Barstugan, S. Masuda, R. Sagawa, F. Kanehiro | International Conference on Control and Robotics | 2024 |
| Learning to Classify Surface Roughness Using Tactile Force Sensors | Y. Houhou, R. Cisneros-Limón, R. Singh | IEEE/SICE International Symposium on System Integration | 2024 |
| Mc-Mujoco: Simulating Articulated Robots with FSM Controllers in MuJoCo | R. Singh, P. Gergondet, F. Kanehiro | IEEE/SICE International Symposium on System Integration | 2023 |
| TransFusionOdom: Transformer-based LiDAR-Inertial Fusion Odometry Estimation | L. Sun, G. Ding, Y. Qiu, Y. Yoshiyasu, F. Kanehiro | IEEE Sensors Journal | 2023 |
| Learning Bipedal Walking for Humanoids with Current Feedback | R. Singh, Z. Xie, P. Gergondet, F. Kanehiro | IEEE Access | 2023 |
| Dual-Arm Mobile Manipulation Planning of a Long Deformable Object in Industrial Installation | Y. Qin, A. Escande, F. Kanehiro, E. Yoshida | IEEE Robotics and Automation Letters | 2023 |
| CertainOdom: Uncertainty Weighted Multi-task Learning Model for LiDAR Odometry Estimation | L. Sun, G. Ding, Y. Yoshiyasu, F. Kanehiro | International Conference on Robotics and Biomimetics | 2022 |
| Learning Bipedal Walking on Planned Footsteps for Humanoid Robots | R. Singh, M. Benallegue, M. Morisawa, R. Cisneros-Limón, F. Kanehiro | IEEE-RAS International Conference on Humanoid Robots | 2022 |
| Enhanced Visual Feedback with Decoupled Viewpoint Control in Immersive Humanoid Robot Teleoperation using SLAM | Y. Chen, L. Sun, M. Benallegue, R. Cisneros-Limón, R. Singh, K. Kaneko, A. Tanguy, G. Caron, K. Suzuki, A. Kheddar, F. Kanehiro | IEEE-RAS International Conference on Humanoid Robots | 2022 |
| Rapid Pose Label Generation through Sparse Representation of Unknown Objects | R. Singh, M. Benallegue, Y. Yoshiyasu, F. Kanehiro | IEEE International Conference on Robotics and Automation | 2021 |
| Visual SLAM framework based on segmentation with the improvement of loop closure detection in dynamic environments | L. Sun, R. Singh, F. Kanehiro | Journal of Robotics and Mechatronics | 2021 |
| Multi-purpose SLAM framework for Dynamic Environment | L. Sun, F. Kanehiro, I. Kumagai, Y. Yoshiyasu | IEEE/SICE International Symposium on System Integration | 2020 |
| Instance-specific 6-DoF Object Pose Estimation from Minimal Annotations | R. Singh, I. Kumagai, A. Gabas, M. Benallegue, Y. Yoshiyasu, F. Kanehiro | IEEE/SICE International Symposium on System Integration | 2020 |
| APE: A More Practical Approach To 6-Dof Pose Estimation | A. Gabas, Y. Yoshiyasu, R. Singh, R. Sagawa, E. Yoshida | IEEE International Conference on Image Processing | 2020 |

Student Members

| Name | Grade | Email (replace the _*_ with @) |
|---|---|---|
| Shimpei Masuda | Ph.D. 3rd Year | masuda.shimpei_*_aist.go.jp |
| Cheng Hong | Ph.D. 2nd Year | cheng.hong_*_aist.go.jp |
| Yitong Li | Ph.D. 2nd Year | li.cornel_*_aist.go.jp |
| Yu Nishijima | Master's 2nd Year | y.nishijima_*_aist.go.jp |
| Tasuku Ono | Master's 2nd Year | ono.tasuku_*_aist.go.jp |
| Yunosuke Aoyama | Master's 2nd Year | aoyama.y0093_*_aist.go.jp |
| Zenong Liu | Master's 2nd Year | zenong.liu_*_aist.go.jp |
| Sunio Morisaki | Master's 1st Year | sunio.morisakiromero_*_aist.go.jp |
| Kotaro Kajitsuka | Master's 1st Year | tactile.kajitsuka_*_aist.go.jp |
| Katsuki Tanabe | Master's 1st Year | tanabe.scone_*_aist.go.jp |
| Sebastian Joya Paez | Master's 1st Year | s.joyapaez_*_aist.go.jp |
| David Mesaros | Research Student | mesaros.d_*_aist.go.jp |
| Rohan Pratap Singh | Graduated (Ph.D.) | |
| Yili Qin | Graduated (Ph.D.) | |
| Leyuan Sun | Graduated (Ph.D.) | |
| Xinchi Gao | Graduated (Master's) | |